Tutorial

Neural networks

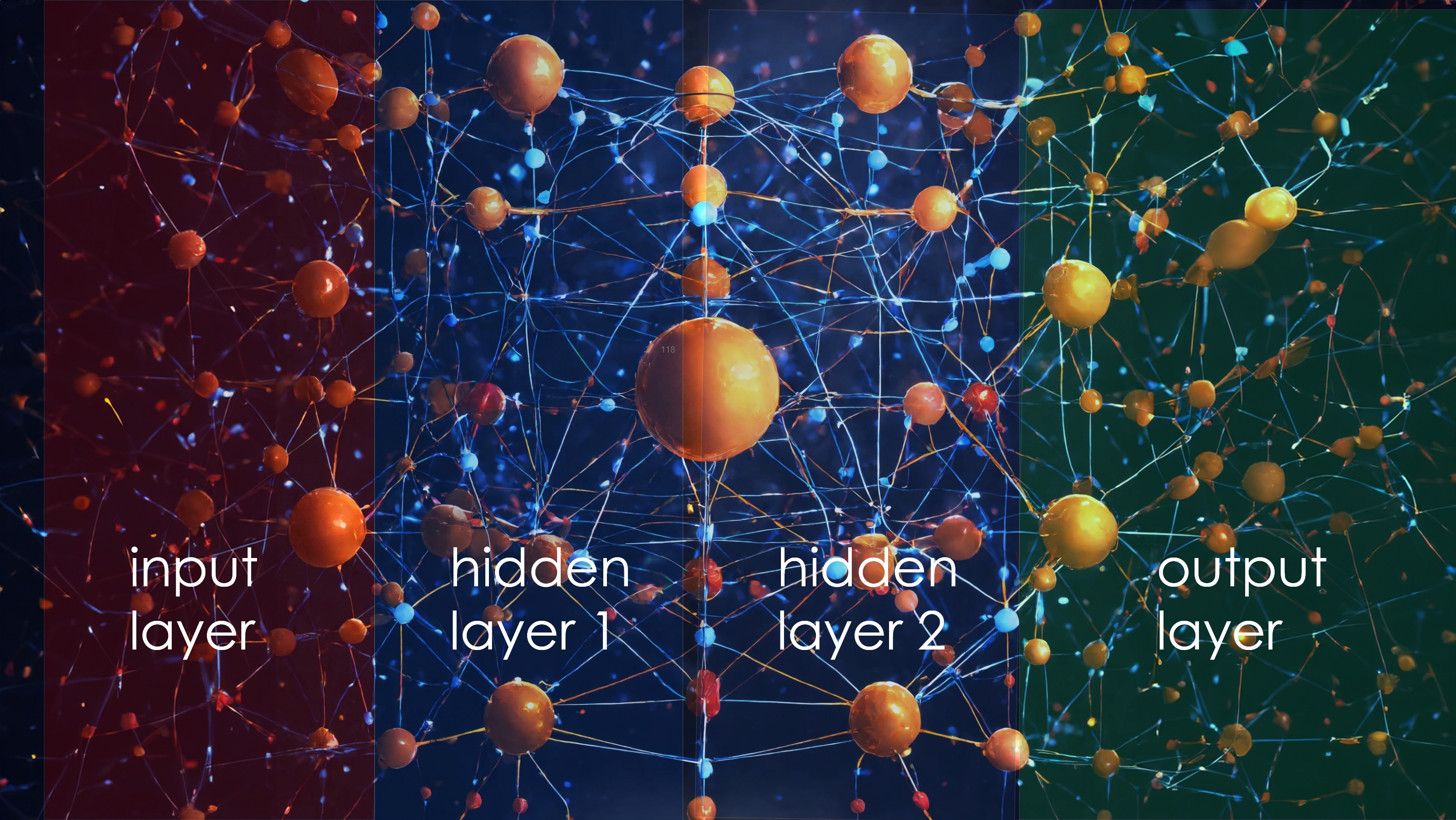

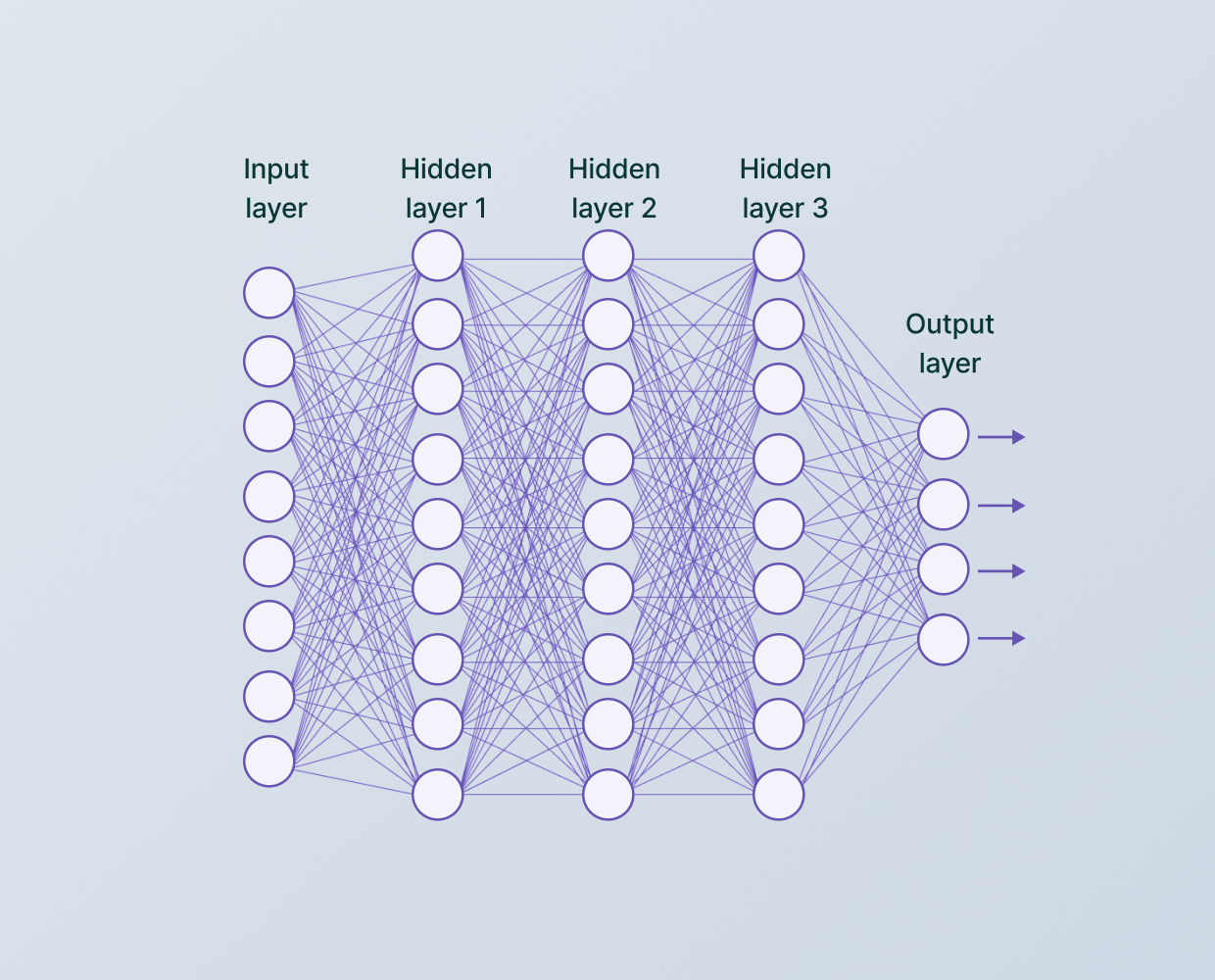

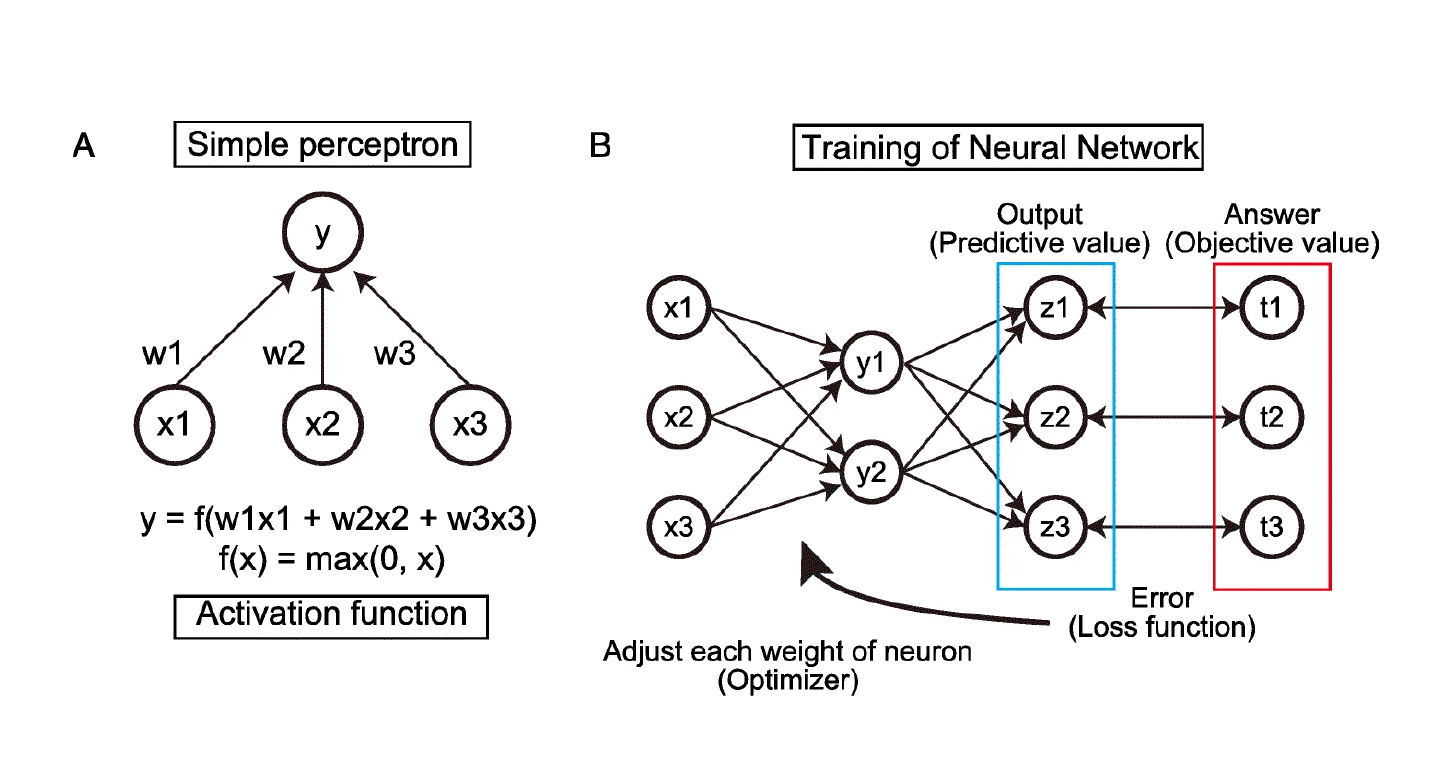

Neural networks, also known as artificial neural networks (ANNs), are computational models inspired by the structure and function of biological neural networks in the human brain. They consist of interconnected nodes, called artificial neurons or "units," organized in layers. Each unit receives input signals, computes a result from them, and produces an output signal that is passed on to the next layer.
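To make the idea of a unit concrete, here is a minimal sketch in plain Python. It assumes one common formulation, in which a unit takes a weighted sum of its inputs, adds a bias, and applies a sigmoid activation. The function names (`sigmoid`, `unit_output`, `layer_output`) and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def unit_output(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer_output(inputs, weight_rows, biases):
    """A layer is a list of units that all receive the same inputs."""
    return [unit_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical inputs, weights, and biases (assumptions for illustration only).
hidden = layer_output([1.0, 0.0], [[0.5, -0.5], [0.0, 0.0]], [-0.5, 0.0])
print(hidden)  # both weighted sums are 0, so both units output 0.5
```

The list returned by `layer_output` is what would be passed on as the input to the next layer.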

There are different types of neural networks, including:

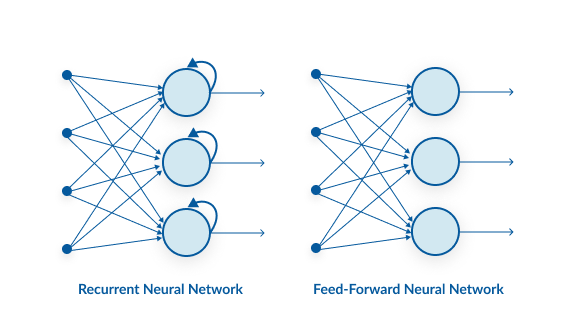

1. Feedforward Neural Networks (FNN): The simplest and most common type of neural network, in which information flows in one direction only, from input to output, with no cycles. An FNN consists of an input layer, one or more hidden layers, and an output layer. FNNs are used for tasks such as classification, regression, and pattern recognition.

2. Convolutional Neural Networks (CNN): CNNs are primarily used for image and video processing tasks. They employ specialized layers, such as convolutional layers, pooling layers, and fully connected layers, to efficiently process and analyze visual data. CNNs have been highly successful in tasks like image classification, object detection, and image segmentation.

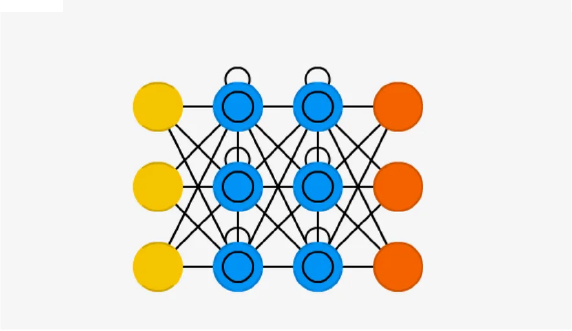

3. Recurrent Neural Networks (RNN): RNNs are designed to process sequential data. The output at each step depends not only on the current input but also on previous inputs, which the network carries forward in its internal state. This makes them suitable for tasks such as speech recognition, language modeling, and time series analysis.

4. Long Short-Term Memory (LSTM) Networks: LSTM networks are a type of RNN designed to mitigate the "vanishing gradient" problem, which lets them model long-term dependencies more effectively. They are commonly used when contextual information must be preserved over long sequences, as in machine translation or text generation.
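The difference between one-directional and recurrent information flow can be sketched in a few lines of plain Python. This is a simplified illustration, not a reference implementation: the recurrent part uses a single scalar state with a `tanh` activation, and every function name, weight, and input value is a hypothetical choice made for the example.

```python
import math


def relu(z):
    """Rectified linear activation: negative values become 0."""
    return max(0.0, z)


def identity(z):
    """Pass the value through unchanged (used for the output layer here)."""
    return z


def dense(inputs, weight_rows, biases, activation):
    """Fully connected layer: each row of weights defines one unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weight_rows, biases)
    ]


def feedforward(x, layers):
    """Pass x through each layer exactly once, from input to output."""
    for weight_rows, biases, activation in layers:
        x = dense(x, weight_rows, biases, activation)
    return x


def rnn_step(x, h_prev, w_in, w_rec, bias):
    """One recurrent step: the new state mixes the current input
    with the previous state."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)


def run_rnn(sequence, w_in=1.0, w_rec=0.5, bias=0.0):
    """Process a sequence one element at a time, carrying the state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states


# Feedforward: a hidden layer with two units, then an output layer with one.
layers = [
    ([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0], relu),
    ([[1.0, 1.0]], [0.0], identity),
]
ff_out = feedforward([1.0, 2.0], layers)
print(ff_out)  # hidden layer gives [3.0, 0.0], so the output is [3.0]

# Recurrent: only the first input is non-zero, yet later states stay
# positive because the internal state carries it forward.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

In the recurrent run, the states shrink step by step but do not drop to zero, which is the "memory" that a plain feedforward pass lacks. An LSTM adds gating on top of this kind of step to control what is kept and what is forgotten; that mechanism is not shown here.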

Applications of neural networks:

Neural Networks Images

Feedforward Neural Networks

Convolutional Neural Networks (CNN)

Recurrent Neural Networks (RNN)

Long Short-Term Memory (LSTM)

Regenerative Neural Networks

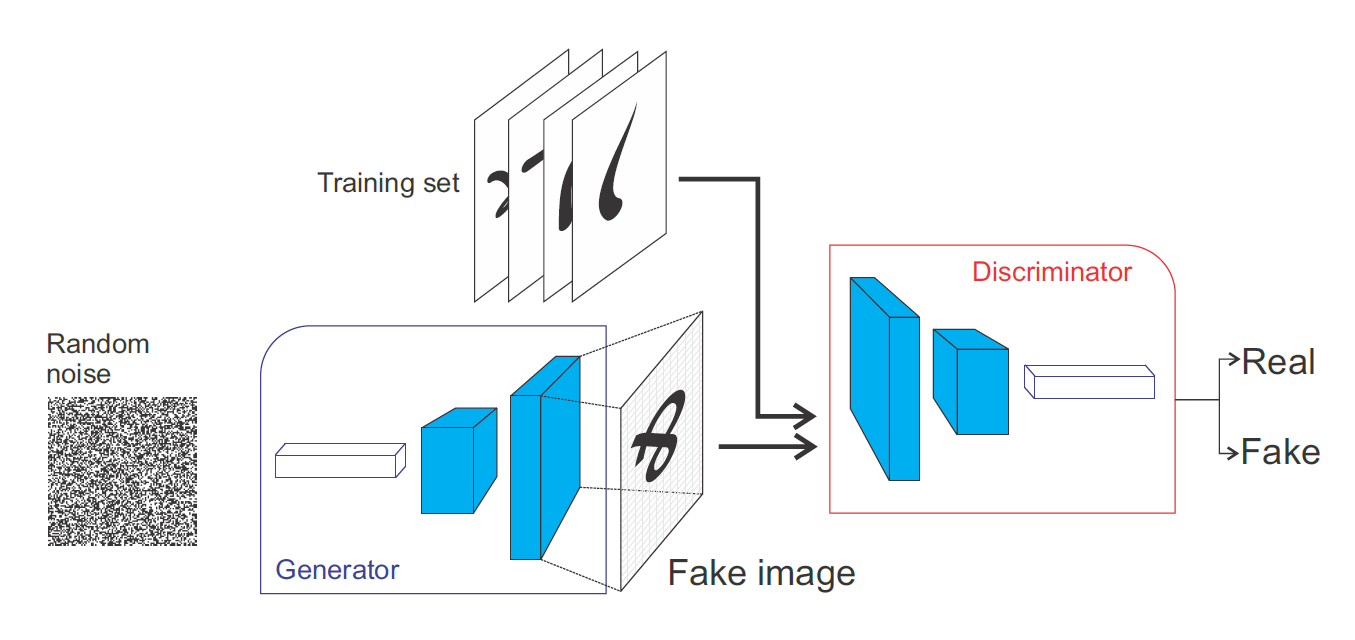

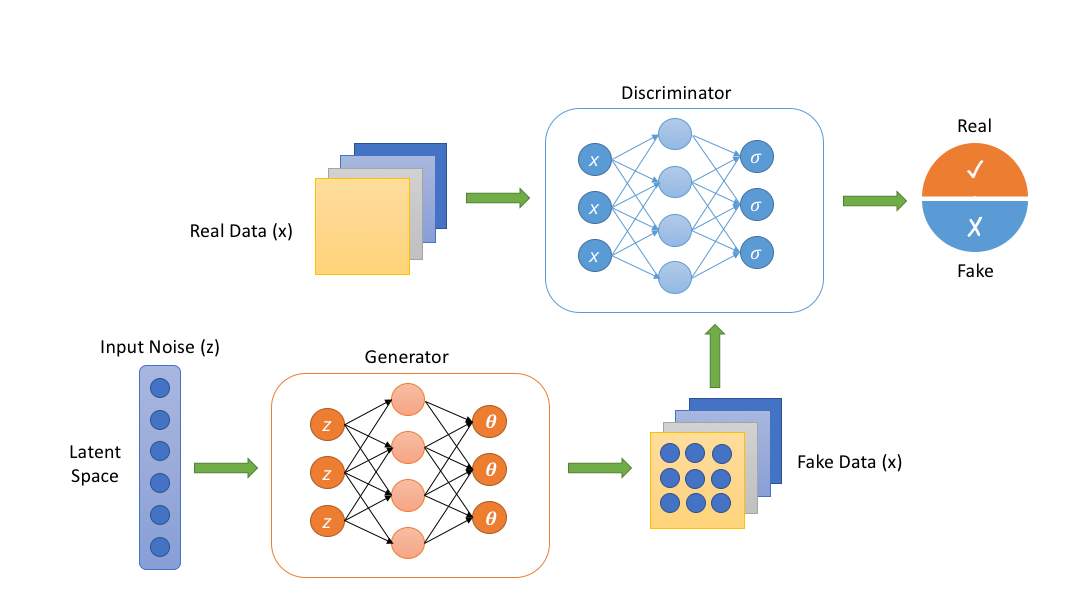

Generative Adversarial Networks (GAN)

Try it

Tinker With a Neural Network Right Here in Your Browser.

Don’t Worry, You Can’t Break It. We Promise.